Hubble Space Telescope's 20th Anniversary Google Doodle

Happy birthday, Hubble!

HubbleSITE was the Space Telescope Science Institute’s site for distributing press releases, images, and videos from the Hubble Space Telescope. On average, the site would get about 4 million hits a day. On days when we had a big press release go out, the traffic would spike to about 8 million hits a day. Traffic from news sites, Digg (this was the late 2000s), and later Reddit would drive a lot of visitors our way, on a pretty regular basis.

The site was built in PHP. A year or so after I started there, we put a lot of effort into performance, using profilers like Xdebug and opcode caches like APC to tighten up the code and avoid recompiling the PHP on every request. The whole website ran on a single big Red Hat Enterprise Linux server hosted in-house (remember, late 2000s), behind the one link to the outside world that the entire Institute shared. Once we learned our early lessons on performance, those 8 million hit days were no big deal.

However, we were going to have to step up our performance even more, and quickly. Google notified us that, for the 20th anniversary of the Hubble Space Telescope’s launch, they were going to do the following:

- Create a Hubble-focused version of their Sky View map service, and link to the press releases on HubbleSITE for the most famous images.

- Create a Google Doodle for the 20th anniversary, and run it for two days instead of the typical one, over the weekend of April 24, 2010.

Being only a few clicks away from Google's home page for an entire weekend meant that we were going to be inundated with traffic, far more than we had ever received before. We needed a way to deliver not just the linked-to press releases, but as much of the site’s content as possible, for that whole weekend.

The plan

We decided to take a multi-pronged approach:

- Ensure that all areas of the site that performed some I/O can have their output cached to static files/in memory.

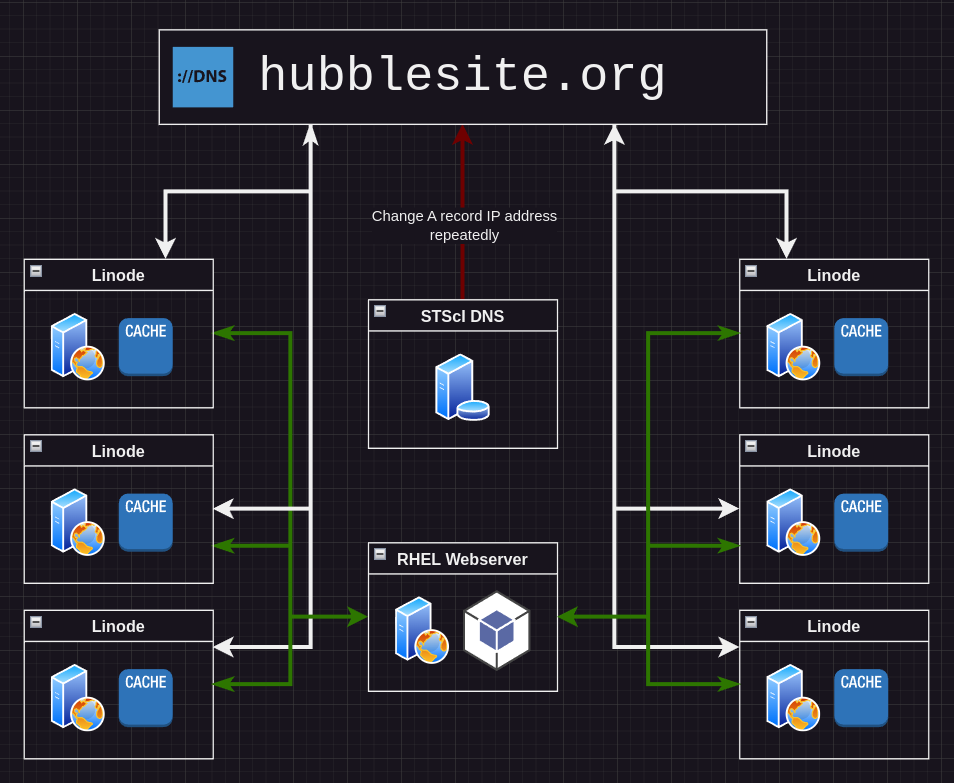

- Use round-robin DNS to attempt to distribute the load to the caching proxies as evenly as possible.

- Place caching proxies in front of that single server on the ‘Tute’s network so neither the server nor the network link melt.

I don’t remember much about the process we used to make all the PHP pages static, but it did involve being able to flip a feature flag on and off for the entire site. DNS was handled by our IT department, and they configured round-robin DNS to hand out a different IP address every so often. I forget how low the TTL was, maybe 15 or 30 seconds?
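Round-robin DNS can be modeled as an authoritative server that returns every proxy address but rotates the order on each query, so clients that connect to the first address spread out across the pool. This is a simplified sketch, assuming six hypothetical addresses from the RFC 5737 documentation ranges, a 30-second TTL, and clients that always use the first address in the answer; real resolvers and operating systems may reorder or cache the list.

```python
from collections import Counter, deque

# Hypothetical proxy addresses (RFC 5737 documentation ranges), one per datacenter.
PROXIES = [
    "192.0.2.10", "192.0.2.20", "198.51.100.10",
    "198.51.100.20", "203.0.113.10", "203.0.113.20",
]

class RoundRobinZone:
    """Answers every A query with all addresses, rotated one step per query."""

    def __init__(self, addresses, ttl=30):
        self.records = deque(addresses)
        self.ttl = ttl  # seconds a resolver may cache the answer (assumed value)

    def query(self):
        answer = list(self.records)
        self.records.rotate(-1)  # the next query starts with the next address
        return answer, self.ttl

def simulate(num_queries):
    """Count which proxy each client tries first, assuming it uses answer[0]."""
    zone = RoundRobinZone(PROXIES)
    first_choice = Counter()
    for _ in range(num_queries):
        answer, _ttl = zone.query()
        first_choice[answer[0]] += 1
    return first_choice

counts = simulate(600)
for addr in PROXIES:
    print(addr, counts[addr])  # each address is first 100 times
```

Because recursive resolvers cache an answer for its TTL, everyone behind one large ISP resolver may land on the same proxy until the record expires, which is one reason to keep the TTL short.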

I was more involved with the caching proxy setup. This was a few years before cloud services became popular, but virtual private servers existed. We decided to get six of the smallest VPSes Linode offered, one in each of the datacenters they had around the world at the time, and run Debian on them.

We used Varnish as the caching proxy server. We made sure that our Apache server and PHP code sent the correct headers to let Varnish cache the content, and we tested the configuration a few weeks before the Doodle went live.
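What "the correct headers" means can be sketched as a rough Python approximation of the cacheability checks in Varnish's built-in VCL, as found in recent versions; the 2010-era release and the site's own VCL may have differed. The `origin_headers` helper and the TTL values in the usage are hypothetical, and the sketch ignores `Expires`, `Age`, and case-insensitive header matching for brevity.

```python
import re

DEFAULT_TTL = 120  # Varnish's default_ttl parameter, in seconds

def origin_headers(max_age):
    """Headers a hypothetical origin could send so shared caches may store a page."""
    return {
        "Cache-Control": f"public, max-age={max_age}",
        "Content-Type": "text/html; charset=utf-8",
    }

def varnish_would_cache(method, req_headers, resp_headers):
    """Approximate Varnish's built-in rules; return the cache TTL in seconds, or 0."""
    if method not in ("GET", "HEAD"):
        return 0  # other methods are passed straight to the backend
    if "Authorization" in req_headers or "Cookie" in req_headers:
        return 0  # built-in vcl_recv passes these requests
    if "Set-Cookie" in resp_headers or resp_headers.get("Vary") == "*":
        return 0  # built-in backend-response logic marks these uncacheable
    cc = resp_headers.get("Cache-Control", "")
    if re.search(r"no-cache|no-store|private", cc):
        return 0
    # s-maxage (shared caches) takes precedence over max-age.
    for directive in ("s-maxage", "max-age"):
        match = re.search(directive + r"=(\d+)", cc)
        if match:
            return int(match.group(1))
    return DEFAULT_TTL

print(varnish_would_cache("GET", {}, origin_headers(300)))                 # 300
print(varnish_would_cache("GET", {"Cookie": "a=b"}, origin_headers(300)))  # 0
```

One common pitfall this makes visible: a PHP page that calls `session_start()` typically sends a `Set-Cookie` header, which is enough for the built-in rules to skip caching it.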

The results

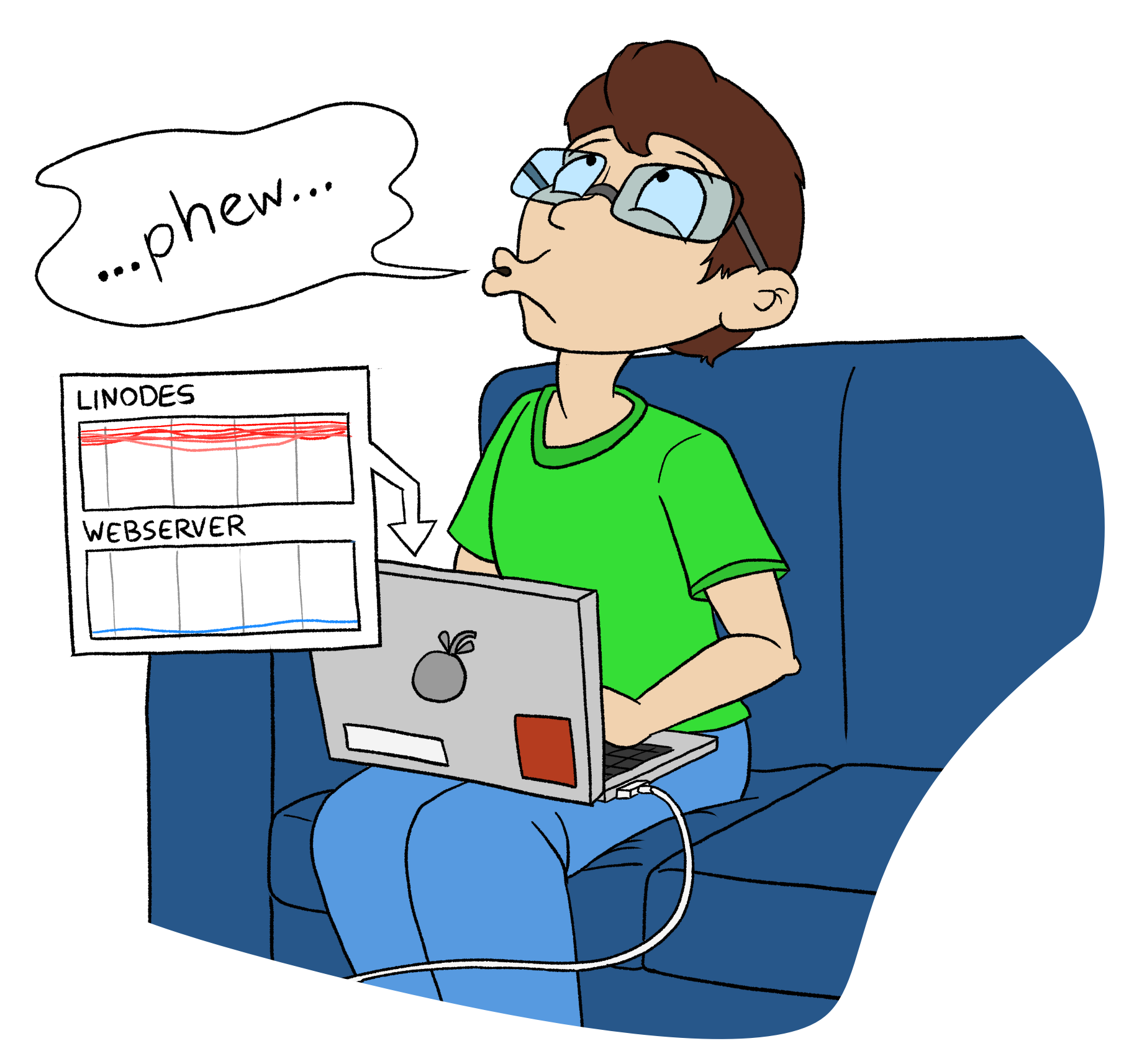

Once it was time, we flipped on read-only mode, let the local cache warm up, and changed the hubblesite.org DNS to round-robin through the Varnish proxies. Monitoring consisted of the top command and a few hand-built graphs of the main webserver’s load. This was before SaaS monitoring services, so we relied on log files afterwards to tell us about the traffic load. We only had one misconfiguration to clean up Friday evening, but once that was done, the cache servers and main webserver stayed up all weekend without a problem.
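The after-the-fact log tally can be sketched as counting requests per hour from access logs. This assumes NCSA combined-format lines, which is what Apache's `combined` LogFormat and `varnishncsa` emit by default; the sample lines below are made up.

```python
import re
from collections import Counter
from datetime import datetime

# Matches the timestamp field of a combined-format line, e.g. [24/Apr/2010:13:55:36 -0400]
TIMESTAMP = re.compile(r"\[(\d{2}/\w{3}/\d{4}):(\d{2}):\d{2}:\d{2} [+-]\d{4}\]")

def requests_per_hour(lines):
    """Return a Counter keyed by (date, hour) for every line with a timestamp."""
    counts = Counter()
    for line in lines:
        match = TIMESTAMP.search(line)
        if match:
            day = datetime.strptime(match.group(1), "%d/%b/%Y").date()
            counts[(day, int(match.group(2)))] += 1
    return counts  # lines without a timestamp are skipped

# Made-up sample lines for illustration.
sample = [
    '192.0.2.1 - - [24/Apr/2010:13:55:36 -0400] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [24/Apr/2010:13:59:02 -0400] "GET /a.jpg HTTP/1.1" 200 8192 "-" "Mozilla/5.0"',
    '203.0.113.9 - - [24/Apr/2010:14:01:10 -0400] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0"',
    "not a log line",
]

for (day, hour), n in sorted(requests_per_hour(sample).items()):
    print(day, f"{hour:02d}:00", n)
# 2010-04-24 13:00 2
# 2010-04-24 14:00 1
```

With one log per proxy, adding the six Counters together (`sum(counters, Counter())`) gives the weekend-wide totals.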

Once it was over, we learned we had handled around 100 million requests in those two days: roughly 50 million a day, an order of magnitude more than our usual 4 million, or about a month’s worth of normal traffic in a single weekend. We ended up keeping the caching proxy setup, since it was inexpensive and significantly reduced network usage in the building.
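The traffic comparison works out as follows, using only the figures above (about 100 million requests, two days, a normal day of about 4 million hits, six proxies). The per-proxy figure assumes round-robin DNS split the load evenly, and all of these are averages; peak rates would have been higher.

```python
SECONDS_PER_DAY = 24 * 60 * 60

total_requests = 100_000_000  # "around 100 million" over the weekend
days = 2
normal_per_day = 4_000_000    # the usual daily average
proxies = 6                   # one Linode VPS per datacenter

per_day = total_requests / days
avg_rps = total_requests / (days * SECONDS_PER_DAY)

print(f"per day: {per_day:,.0f}")                                     # 50,000,000
print(f"vs. a normal day: {per_day / normal_per_day:.1f}x")           # 12.5x
print(f"normal days covered: {total_requests / normal_per_day:.0f}")  # 25
print(f"average requests/s: {avg_rps:.0f}")                           # 579
print(f"per proxy, if evenly split: {avg_rps / proxies:.0f}")         # 96
```

Twenty-five normal days is where "about a month's worth" comes from, and a 12.5x jump is what "an order of magnitude" means here.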